[originally published on The Last American Vagabond]

“Can we forecast earthquakes? No. Neither the United States Geological Survey (USGS) nor any other scientists have ever predicted a major earthquake. We do not know how, and we do not expect to know how any time in the foreseeable future.”

-United States Geological Survey website

On the morning of February 6, 2023, the people of Turkey and Syria were struck by a devastating magnitude 7.8 earthquake, followed by a 6.7 aftershock and then a final (we hope) magnitude 7.5 quake in the late afternoon. The effects of this threefold quake reached deep into Syria, and as of this writing over 23,000 deaths have been counted in Turkey and Syria, along with tens of thousands of injuries and incredible destruction to infrastructure.

Were it not for the political obfuscation that has derailed all fields of science over the past decades, this tragic loss of life would have been entirely preventable.

How?

Because despite the clamorings of the priests of standard model geology managing the US Geological Survey, the fact is that earthquakes are completely forecastable.

Take the singular case of Dutch scientist Frank Hoogerbeets, representing the self-funded Solar System Geometry Survey (SSGEOS), who published a warning on Twitter a full three days prior to the February 6th disaster.

Reflecting on the method he and other like-minded scientists use within the international forecasting community, Hoogerbeets explained:

“As I stated earlier…this would happen in this region, similar to the years 115 and 526. These earthquakes are always preceded by critical planetary geometry, as we had on the fourth-fifth of February”

What sort of “planetary geometries” is Hoogerbeets talking about?

It isn’t that Hoogerbeets uses a crystal ball, believes in astrology or has better data than the scientists of the US Geological Survey; rather, he is simply a real scientist who doesn’t believe in dogmatic procedures masquerading as “science” if they don’t actually work. His method of treating “planetary geometries” as an important component of his success was laid out in a three-minute introductory video, “Earthquakes and Electro Magnetic Waves”.

It should also be noted that this was not Hoogerbeets’ first successful forecast.

On February 2, 2023, the SSGEOS published that there was “potential for stronger seismic activity in or near the purple band (indicating the east side of South America) in 1-6 days.” This warning was followed on February 5, 2023 by a magnitude 5.6 earthquake that struck Coquimbo, Chile.

On January 29, 2023, SSGEOS predicted stronger seismic activity in an area which Hoogerbeets outlined on a map as southern China and northern India. This was followed within a day by a magnitude 5.8 earthquake that hit southern Xinjiang.

Since setting up the SSGEOS in 2014, Hoogerbeets and his team have made hundreds of successful forecasts, which stand in loud contrast to their mainstream rivals, whose commitment to statistical probability theory and linear computer modeling has consistently resulted in dismal failure for decades.

What sets Hoogerbeets apart from the statisticians who have come to dominate the field of seismology is simply his emphasis upon the electro-magnetic, chemical, and galactic properties of earth’s dynamics.

Unlike the modern “seismologists” who assert that everyone must adhere to the absurd “elastic rebound theory”, which presupposes that the sole causes of earthquakes are located within tectonic plates and gravitational forces, those scientists who make successful predictions in this contentious field choose instead to focus on the electromagnetic properties of the earth and of the broader solar system (and galaxy) shaping the earth’s environment.

As Hoogerbeets states:

“Based on our research, it appears that gravity is not responsible for larger earthquakes at the time of critical planetary and lunar geometry. The most likely force acting on Earth’s crust at the time of critical geometry is electromagnetic. This could also explain the lightning in Earth’s atmosphere prior to larger earthquakes which could be the result of atmospheric forcing induced by electromagnetic charge from critical geometry between celestial bodies in the solar system.”

Throughout Hoogerbeets’ writings and educational videos, the Dutch forecaster explains that the space between planets and between stars is not empty but permeated by subtle but efficient magnetic fields and electric currents which feed into each of the planets, moons, and the sun. The analogy used for this process is not a computer model with abstract notions of “gravitational forces pulling on objects within empty space”, as is so often the case, but rather an electrical process, with the sun acting as a form of dynamo and the planets acting as antennas that simultaneously receive, transform, and emit signals at certain specific wavelengths.

Quoting RCA radio engineer John Nelson, whose 1,500 forecasts of atmospheric conditions in the 1960s were made with 95.2% accuracy, Hoogerbeets wrote:

“The similarity between an electrical generator with its carefully placed magnets and the sun with its ever-changing planets is intriguing. In the generator, the magnets are fixed and produce a constant electrical current. If we consider that the planets are magnets and the sun is the armature, we have a considerable similarity to the generator”

This property of the planets and moons within the solar system was confirmed by the Voyager and Cassini spacecraft, which recorded specific EM waves emitted from the planets, ranging across radio, microwave, infrared, and even shorter wavelengths.

It was also outlined beautifully by SAFIRE project lead scientist Dr. Michael Clarage in his recent 16-minute video, “Function in the Cosmos”.

Admittedly, what causes the EM emissions and absorption between planets is not understood. Also not fully understood is how these emissions influence activity within the earth’s atmosphere and ionosphere, not to mention the deep crust, mantle, and core of the earth. Humans have, after all, only pierced 16 km through the 60 km crust and have no direct knowledge of the mantle or anything below it.

Despite our ignorance of so much, we do know some things about the magnetic fields and resonances within our solar system, and simply acknowledging this reality and its influence on the affairs of earth is itself the first step to making a discovery… which is more than can be said of the standard theory gatekeepers attempting to keep new discoveries from emerging.

Core Precursors to a Science of Earthquake Forecasting

One of the factors which appears to be playing a much larger role within the science of earthquakes involves the release of elements like radon into groundwater near earthquake epicenters in the days and hours before and after an event.

What causes the release of radon is still unknown, but this is what technician Giampaolo Giuliani was monitoring when he predicted the 2009 earthquake that struck L’Aquila, Italy, days later.

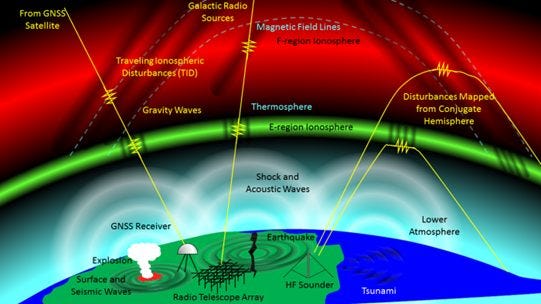

Another particularly important variable in earthquake forecasting involves the behavior of the large layer of ionised plasma surrounding the earth, beginning at about 40 miles and stretching to 600 miles above the surface. This zone is called the ionosphere and is replete with electrons and electrically charged atoms and molecules, driven by the constant flux of radiation (mostly UV and X-ray) emitted by the sun but also influenced by the EM pulses of other planets within the electrical circuit that is our solar system.

As Sergey Pulinets described in his Principles of Organizing Earthquake Forecasting Based on Multi-Parameter Sensors (October 16, 2020): “In the case of ionospheric precursors, the precursor… manifests itself in the form of a strong positive variation of the electron concentration over the earthquake preparation zone”

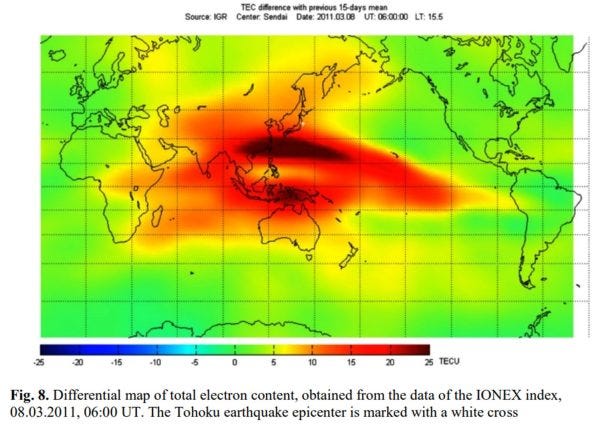

In the relatively recent case of the deadly magnitude 9.1 earthquake that struck Japan in March 2011, the resulting tsunami smashed into the Japanese coast, killing over 20,000 people and leaving $38 billion in damage in its wake. As can be seen in the accompanying graphic, this tragedy would have been entirely forecastable had anyone looked at the spike in electron density in the ionosphere above the epicenter, which began eleven days prior to the disaster, as demonstrated in a forensic analysis by Chinese researcher Fuying Zhu of the Wuhan Institute of Seismology in August 2011.

Another study, conducted by Japanese seismologist Kosuke Heki, not only substantiated Zhu’s findings but went further back and found the same electron fluxes in the ionosphere shortly before the magnitude 8.8 earthquake that struck Chile in 2010, killing 524 people, and before the magnitude 8.3 Hokkaido earthquake of 1994.
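A “spike in electron density” of the kind described in these studies is normally judged against a baseline built from the preceding days. The Python sketch below shows one simple way to do that, assuming a daily series of total electron content (TEC) values and a sliding-median baseline with an interquartile-range bound. The 15-day window, the factor of 1.5, the function name `flag_positive_anomalies` and the sample numbers are all illustrative assumptions; this is not the procedure used by Zhu or Heki.

```python
from statistics import median, quantiles


def flag_positive_anomalies(tec, window=15, k=1.5):
    """Return indices of days whose TEC exceeds an upper bound built from the
    previous `window` days: median + k * (interquartile range).
    The window length and k are assumed values chosen for illustration."""
    flagged = []
    for i in range(window, len(tec)):
        history = tec[i - window:i]          # only earlier days are used
        q1, _, q3 = quantiles(history, n=4)  # quartile cut points
        upper = median(history) + k * (q3 - q1)
        if tec[i] > upper:
            flagged.append(i)
    return flagged


# Invented daily values in TEC units, not real observations
tec = [20.0, 21.0, 19.5, 20.5, 20.0, 21.5, 19.0, 20.0, 20.5, 21.0,
       19.5, 20.0, 20.5, 21.0, 20.0, 20.5, 35.0, 20.0]
print(flag_positive_anomalies(tec))
```

Because each bound is computed only from earlier days, every flag relies on information that would already have been available on that day, which is the property a real-time monitor would need.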

In 2011, a team of researchers began poring over data accumulated by the DEMETER satellite, the most advanced satellite designed to trace earthquake precursors from space during its operation from 2004 to 2010. The researchers were looking for any electromagnetic anomalies that would have given the government of Haiti time to foresee the magnitude 7.0 earthquake that took the lives of 250,000 people on January 12, 2010.

The team published a paper on their findings where they wrote: “One day (11 January 2010) before the earthquake there is a significant enhancement of electron density and electron temperature near the epicenter… Statistical processing of the DEMETER data demonstrates that satellite data can play an important role for the study of precursory phenomena associated with earthquakes.”

As is the case in most instances of electromagnetic/chemical precursors, the project had no budget to pay for any staff to analyse the data in real time, and thus nothing was seen or done.

Earlier work on successful forecasting that turns the supposed rules of ‘elastic rebound theory’ upside down includes that of Stanford electrical engineer Dr. Antony Fraser-Smith, who accurately forecast the magnitude 7.1 Loma Prieta earthquake in the San Francisco Bay Area, California, two weeks before it struck on October 17, 1989. Dr. Fraser-Smith had installed sensors near the eventual epicenter of the quake, which registered a 20-fold spike in ultra low frequency (ULF) radio waves 14 days before the shock, rising to a 60-fold spike above average three hours prior to the event.
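The “20-fold” and “60-fold” figures are ratios of a measured amplitude to its quiet-time average. A minimal Python sketch of that arithmetic, assuming a list of ULF amplitude readings in arbitrary units whose first few samples define the baseline; the readings and the helper names `fold_over_baseline` and `first_crossing` are hypothetical, and the original Loma Prieta analysis was considerably more involved than a simple mean.

```python
from statistics import fmean


def fold_over_baseline(amplitudes, baseline_len):
    """Express each sample after the baseline period as a multiple of the
    mean of the first `baseline_len` samples (assumed to be quiet time)."""
    if baseline_len <= 0 or baseline_len >= len(amplitudes):
        raise ValueError("baseline_len must leave at least one sample to evaluate")
    baseline = fmean(amplitudes[:baseline_len])
    return [a / baseline for a in amplitudes[baseline_len:]]


def first_crossing(ratios, threshold):
    """Index (within `ratios`) of the first ratio at or above `threshold`, or None."""
    return next((i for i, r in enumerate(ratios) if r >= threshold), None)


# Invented readings: five quiet samples, then a rise
amps = [1.0, 1.2, 0.8, 1.1, 0.9, 2.0, 20.0, 25.0, 60.0]
ratios = fold_over_baseline(amps, baseline_len=5)
print(first_crossing(ratios, 20), first_crossing(ratios, 60))
```

Reporting the first crossing of each threshold separately mirrors the way the paragraph distinguishes the earlier 20-fold rise from the later 60-fold one.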

Similar precursors were observed by researchers in Armenia before a magnitude 6.9 earthquake in December 1988 and again days prior to a magnitude 8.0 earthquake in Guam in August 1993.

Inspired by Dr. Fraser-Smith’s 1989 forecast in California, a scientist named Tom Bleier set up QuakeFinder in Palo Alto, California, in 2000. It currently oversees a network of 125 magnetometers around the San Andreas Fault, part of the massive earthquake-dense zone called the Ring of Fire that stretches from Japan around Russia and Alaska and down the western coast of the Americas. Working with a group called ‘Stellar Solutions’, Bleier’s team has spent 20 years accumulating evidence of similar precursors that occurred before dozens of small to medium earthquakes.

Another team of researchers took Fraser-Smith’s insights and reviewed the case of the massive Taiwanese earthquake of September 21, 1999, which resulted in 2,500 deaths and NT$300 billion in damages. Not only did this team discover the ULF signals days in advance, but it also found multiple points of connection to solar wind streams that accompanied those ultra-low-frequency emissions, which emerged from at least 8 km below the earth’s surface.

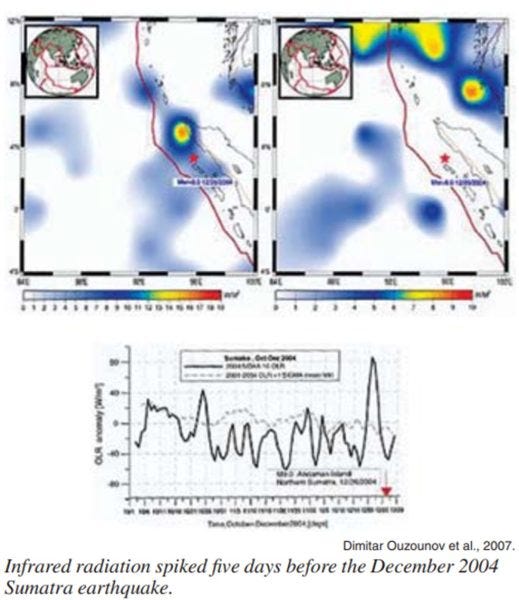

Another electromagnetic precursor that has borne fruit is infrared emission, which also spikes prior to large earthquakes. NASA’s Terra Earth Observing System satellite caught such “thermal anomalies” over Gujarat, India, on January 21, 2001, five days prior to a magnitude 7.7 earthquake that killed over 20,000 civilians and destroyed 350,000 buildings. The anomaly disappeared immediately after the quake.

As can be seen in the accompanying image, the magnitude 9.3 earthquake and tsunami that killed 228,000 people in Sumatra, Indonesia, on December 26, 2004 was preceded by an anomalous spike in infrared radiation five full days before the tragedy. Unfortunately, due to the dismissal of this entire field of science as “fringe” heresy, these precursors are either ignored OR only discovered AFTER the disasters strike, as no financial resources are made available to staff the facilities needed to interpret the data in real time.

There are many more cases of earthquake forecasting which could have been raised that take into account all those parameters mentioned above and more.

Keplerian Roots of Modern Forecasting

It is important to hold in mind that this is not a new or ‘fringe’ field that emerged in recent history; it goes back literally millennia. Perhaps the earliest outline of planetary geometries and harmonics playing a direct role upon the material conditions of nature on earth was developed by Plato in the Timaeus dialogue around 360 BCE.

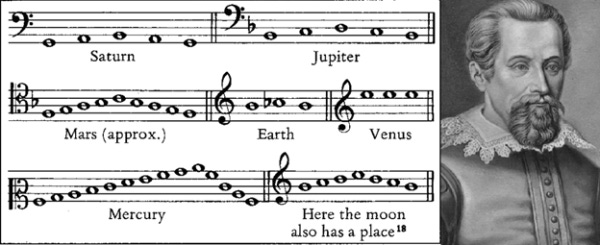

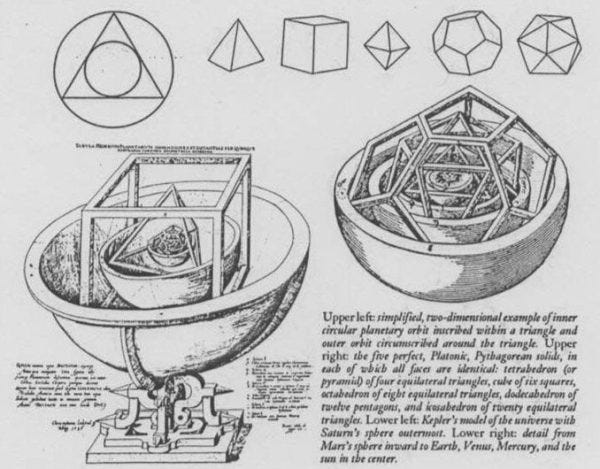

While the Pythagorean study of the harmony of the spheres and the lives of humans remained in the realm of philosophy for two millennia after the Timaeus was written, it was the scientist Johannes Kepler who first established an actual science of astrophysics and planetary forecasting with his Mysterium Cosmographicum (1596), followed by his New Astronomy (1609), culminating in his Harmonies of the World (1619).

It was in this last work that Kepler consummated 30 years of research on the Pythagorean hypothesis and set out his famous third law (aka: the harmonic law) of planetary motion.
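Kepler’s third law states that the square of a planet’s orbital period is proportional to the cube of its mean distance from the sun. With the period T in years and the semi-major axis a in astronomical units, the constant is 1 for bodies orbiting the sun, so T² = a³, or T = a^1.5. A short Python sketch; the semi-major axes are rounded standard values, and the planet’s own mass is neglected relative to the sun’s.

```python
def orbital_period_years(semi_major_axis_au):
    """Kepler's third law for a body orbiting the sun: T**2 = a**3,
    with T in years and a in astronomical units (planet mass neglected)."""
    return semi_major_axis_au ** 1.5


# Approximate semi-major axes in astronomical units
planets = {"Earth": 1.000, "Mars": 1.524, "Jupiter": 5.203, "Saturn": 9.537}
for name, a in planets.items():
    print(f"{name}: {orbital_period_years(a):.2f} years")
```

Since Earth’s orbit defines both units, the law reduces to a single exponent, which is why Kepler could state it as a pure proportion between periods and distances.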

In Book 4, Chapter 7 of the Harmonies of the World, Kepler writes:

“The view that there is some soul of the whole universe, directing the motions of the stars, the generation of the elements, the conservation of living creatures and plants, and finally the mutual sympathy of things above and below, is defended from the Pythagorean beliefs by Timaeus of Locri in Plato… a Christian can easily understand by the Platonic mind, God the Creator and by the soul, the nature of things” [p. 358]

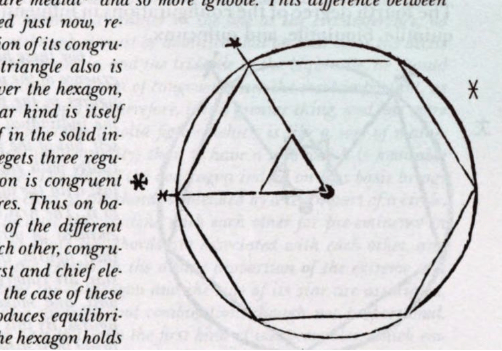

Kepler worked through several chapters outlining the planetary and lunar geometries (which he dubbed ‘aspects’) that conform to visual harmonies in the form of archetypal angles generated from elementary polygons. Those elementary geometries include, but are not limited to, triangles, squares, pentagons, hexagons and octagons, as well as the internal angles generated from these shapes. With this accomplished in Book Three of his Harmonies, Kepler outlined a set of angles that define specific quantized states based on the earth’s relationship to the various planets, the moon, and the sun.
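One way to make the notion of an “aspect” concrete is to measure the angle between two bodies’ ecliptic longitudes as seen from earth, fold it into the range 0° to 180°, and ask whether it lies within a small tolerance (an “orb”) of one of a chosen set of angles. The Python sketch below does that. The aspect table, the 2° orb and the sample longitudes are illustrative assumptions, not Kepler’s own table, and a real check would need geocentric longitudes from an ephemeris.

```python
# Illustrative aspect table in degrees (an assumed selection, not from the Harmonies)
ASPECTS = {
    "conjunction": 0.0,
    "sextile": 60.0,
    "quintile": 72.0,
    "square": 90.0,
    "trine": 120.0,
    "sesquiquadrate": 135.0,
    "biquintile": 144.0,
    "opposition": 180.0,
}


def separation(lon1, lon2):
    """Smallest angle between two ecliptic longitudes, folded into [0, 180]."""
    d = abs(lon1 - lon2) % 360.0
    return 360.0 - d if d > 180.0 else d


def match_aspect(lon1, lon2, orb=2.0):
    """Return (name, deviation) of the nearest listed aspect if it lies
    within `orb` degrees of the actual separation, otherwise None."""
    sep = separation(lon1, lon2)
    name, angle = min(ASPECTS.items(), key=lambda kv: abs(sep - kv[1]))
    deviation = abs(sep - angle)
    return (name, deviation) if deviation <= orb else None


# Hypothetical longitudes in degrees, not ephemeris data
print(match_aspect(10.0, 131.0))  # separation of 121 degrees
print(match_aspect(350.0, 5.0))   # separation of 15 degrees
```

Folding the separation into 0° to 180° means the order of the two bodies does not matter, and the orb parameter makes explicit how close to an ideal angle a configuration must be before it is counted.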

Kepler was no numerologist and recognized that numbers were not self-contained causes but rather the effects of those archetypal shapes that permeated all physical space-time. For example, numbers like 3, 4, 5, 6 and 8 would be expressed by the elementary shapes (triangle, square, pentagon, hexagon, octagon), which can then be combined into the five Platonic Solids and 13 Archimedean polyhedra.

When nested inside each other, these Platonic solids determine a set of proportions which Kepler used to guide 30 years of research into the causes of the planets’ positions around the sun. In his New Astronomy, he also speculated that the planets were moved by a magnetic force emanating from the rotating sun[1].

Angles contained within the elementary shapes are also treated as qualities rather than self-contained quantities. For example, squares generate angles of 90 degrees, while triangles feature angles of 60 and 120 degrees. Pentagons generate angles of 108 and 72 degrees, while hexagons generate angles of 120 and 60 degrees, and so on.
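The paired figures for each shape follow from two standard formulas for a regular polygon with n sides: each interior angle is 180°(n − 2)/n, and each exterior angle, which equals the central angle subtended by one side, is 360°/n. The two always sum to 180°. A small Python sketch computing both for the shapes named in this section; exact fractions are used so that shapes such as the heptagon would not suffer rounding.

```python
from fractions import Fraction


def polygon_angles(n):
    """Interior and exterior (equal to central) angle of a regular n-gon, in degrees."""
    if n < 3:
        raise ValueError("a polygon needs at least 3 sides")
    interior = Fraction(180 * (n - 2), n)
    exterior = Fraction(360, n)  # interior + exterior == 180
    return interior, exterior


for name, n in [("triangle", 3), ("square", 4), ("pentagon", 5),
                ("hexagon", 6), ("octagon", 8)]:
    interior, exterior = polygon_angles(n)
    print(f"{name}: interior {float(interior):g}, exterior {float(exterior):g}")
```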

In his Harmonies of the World, Kepler demonstrates the musical proportions of these numbers as consonances, presenting a model of the solar system built on musical scales featuring both major and minor modes.

Within Book Four of the Harmonies, Kepler breaks from the astrologers and statisticians dominating the ‘standard models’ of his day by outlining various verifiable weather phenomena that coincide with these “aspects” saying: “I was moved to that… only and solely by observation of the weather and study of the aspects by which it is excited. For I saw that with great consistency the state of the atmosphere was disturbed whenever planets were either in conjunction or configured in the aspects commonly spoken of by the astrologers. I saw that there was generally calm in the atmosphere if few or no aspects occurred or if they were quickly completed or concluded. Indeed I considered that this business should not be considered so lightly as the common herd of forecasters usually does.”

Later on, Kepler discusses various weather phenomena and their correlation with various geometrical configurations of the solar system saying:

“I took account of consistent experience, not indeed concentrating in that way on snows in particular, or winds, or thunder and the other things which astrologers usually predict, but observing in general that the state of the air was disturbed in some way or other if there were aspects, for example if Mars and Jupiter were in conjunction, and were peaceful or if there were not any [conjunctions].”

Gauss and Weber Pioneer the Electric Model of the Atom and Universe

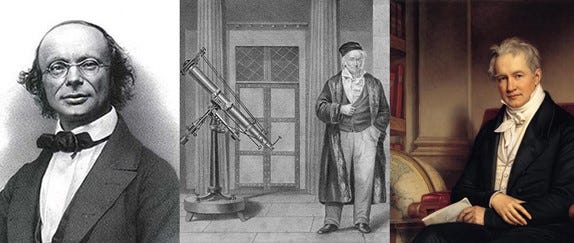

Later on, German scientists Carl Gauss (1777-1855) and Alexander von Humboldt (1769-1859) developed the Keplerian model of a harmonious universe even further by leading an international scientific program, accomplished in 1838, to chart the invisible magnetic field shaping the world.

In Glassmeier and Tsurutani’s brilliant 2014 study Carl Friedrich Gauss – General Theory of Terrestrial Magnetism, the authors write:

“As Gauss stated in a letter to his friend Wilhelm Olbers (1781–1862), he was interested in the terrestrial magnetic field as early as 1803. This interest was greatly stimulated after meeting Baron Alexander von Humboldt (1769–1859) and Wilhelm Weber (1804–1891) in Berlin in 1828. After 1831, his major collaborator was Wilhelm Weber. Inspired by Alexander von Humboldt, Gauss and Weber realized that magnetic field measurements needed to be done simultaneously and globally with standardized instruments. This research program led to the foundation of the Göttinger Magnetischer Verein in 1836, an organization without much formal structure, only devoted to organizing magnetic field measurements throughout the world.”

Gauss was also a forecaster of the highest order: in 1801 he was the first to calculate the location of the asteroid Ceres, which verified a forecast made some 200 years earlier by Kepler, who stated that the gap between Mars and Jupiter would necessarily contain a planet (in this case it appears that the asteroid belt is either the residue of a former planet or the material that may someday form into a planet).

Gauss was also among the first scientists to recognize the necessity of electric currents flowing in a layer high above the earth’s surface, a layer that would be verified with the discovery of the ionosphere in the 1920s.

It was also Gauss’ close friend and collaborator Wilhelm Weber who carried the Keplerian hypothesis of harmonic relations shaping the frequencies of space into the domain of the micro universe. In the 1850s, Weber actually became the first scientist to measure the exact distance of an electron orbiting a nucleus (which he successfully did without actually seeing the electron or nucleus).

Neither Weber nor Max Planck, who later picked up the torch Weber left to posterity, saw a schism between the macro universe in the large and the micro universe in the small. For these scientists, keys discovered in one domain were also valuable in unlocking doors of the other.

Max Planck Stands up for Truth

It is thus no small irony that Planck’s success in founding a new science in the quantum world was motivated by his commitment to Kepler’s method that trumped “the common herd of forecasters” of the 17th century.

In his Where is Science Going? (1932), Planck warned of the corruption of science and forecasting caused by the spread of the statisticians and formalists who lacked the creative flexibility and love of truth needed to continue the momentum of new discoveries opened up by the great minds of Planck’s generation. The old musician/scientist contrasted Johannes Kepler with his contemporary Tycho Brahe, who both had access to the same data, although only one had the spark of love of truth that ushered in the creation of a new physics. Max Planck wrote:

“Kepler is a magnificent example of what I have been saying. He was always hard up. He had to suffer disillusion after disillusion and even had to beg for the payment of the arrears of his salary by the Reichstag in Regensburg. He had to undergo the agony of having to defend his own mother against a public indictment of witchcraft. But one can realize, in studying his life, that what rendered him so energetic and tireless and productive was the profound faith he had in his own science, not the belief that he could eventually arrive at an arithmetical synthesis of his astronomical observations, but rather the profound faith in the existence of a definite plan behind the whole of creation. It was because he believed in that plan that his labor was felt by him to be worth while and also in this way, by never allowing his faith to flag, his work enlivened and enlightened his dreary life. Compare him with Tycho de Brahe. Brahe had the same material under his hands as Kepler, and even better opportunities, but he remained only a researcher, because he did not have the same faith in the existence of the eternal laws of creation. Brahe remained only a researcher, but Kepler was the creator of the new astronomy.”

The Statisticians Play Dice with Truth

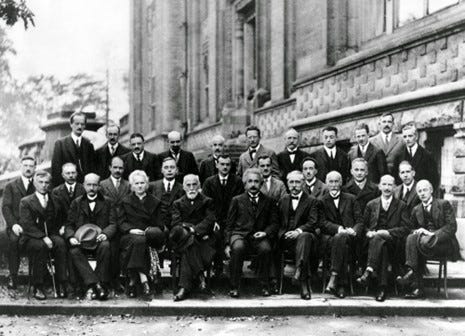

While it is underappreciated today, as Max Planck was saying these words amidst the rise of a new techno-feudal system of fascism in his native Germany, a pitched battle was being waged over what direction science would take in the 20th and 21st centuries.

On the one side stood Planck, Einstein, Madame Curie and other great scientists who actually made revolutionary discoveries about the universe, and on the other side stood the mathematical statisticians of the “Copenhagen school” led by Niels Bohr, Werner Heisenberg and Wolfgang Pauli. This latter school of probability theorists correctly demanded that a new science was needed due to the anomalous data emerging in the realm of the quantum and new studies of deep space which couldn’t be explained by the “classical model” of Newtonian science.

The fact that this new school of statisticians never discovered anything didn’t stop them from being dubbed the victors of the Solvay Conference in 1927. In the wake of this battle, pioneering scientists like Max Planck, Albert Einstein, Mendeleev and Marie Curie were placed in the same category as rigid “classical” positivists of the Newtonian sect (including Ernst Mach, Bertrand Russell, Rudolf Clausius and David Hilbert) who demanded that the only definition of “truth” acceptable in the realm of science had to be mathematical perfection.

According to these anti-creative positivists, IF it could be proven that mathematical perfection were an impossible ideal, then TRUTH ITSELF had to be rejected as having any assumed existence.

By treating all scientists that believed in truth as “positivists”, a straw man was created which the young Copenhagen statisticians jumped on. Since the universe could be demonstrated to be shaped by non-linearity and elements of uncertainty (evidenced by Kurt Gödel’s famous 1931 proof) that denied the possibility of absolute mathematical truth, it was asserted that only the science of “dice rolling” (aka: statistical probability) be permitted to scientists wishing to conduct any experimental research on reality, either of the atomic world or even of the macrocosm. This was the context shaping Einstein’s famous statement to Niels Bohr that “God does not play dice with the universe”.

In this perversion of science, randomness and uncertainty became presumed “laws” in the domain of the quantum in the very small, while a stiff mechanistic determinism became the assumed dominant law of the macrocosm in the very large. It didn’t take long for these contrary impulses to become forced together into something called “Standard Model Cosmology” which became a soulless dead corollary to “Standard Model Quantum Mechanics” during the Cold War.

And within the insanity of the shadowland of lies that was the Cold War, the fear of nuclear annihilation increasingly swept the love of truth in science away, and the unbounded financial resources of the monstrous military industrial complex absorbed cutting edge scientific work into the classified world of black budgets and espionage with no connection to the benefit for the civilian sector or universal knowledge more generally. Scientists who didn’t conform to the new normal were increasingly purged from the scientific establishment as a newer generation of cognitively handicapped scientists emerged onto the scene, leaving nothing unaffected by their toxic irrationalism.

The Cancer Metastasizes: Economics, Ecology, and Geology Infected

All of a sudden, scientists were told to accept the deterministic rules of a universe that supposedly emerged out of nothing exactly 13.7 billion years ago, and would die a slow heat death in some linear extrapolation into the future. While this fatalistic determinism was enforced from the top down, a fatalistic indeterminism was enforced from the bottom up, whereby scientists had to accept that nothing could be known of the specific principles shaping the existence of protons, electrons, or other sub-atomic behavior. Every system in the universe, from organisms and human economies to galaxies and solar systems, was assumed to be both rigidly closed and deterministic AND ALSO random, fluid, and irrational.

This self-contradictory dualism embedded as a Trojan Horse not only derailed discoveries in atomic science (with fusion power increasingly dubbed ‘the impossible dream of forever being 30 years away’), but also in political economy and climate science.

In economics, this dualism was unleashed with the post-1971 floating of the US dollar onto global speculative markets as a new consumer society cult was imposed onto the western world. Under this new era that became known as “globalization”, economics was defined as the hedonistic pursuit of pleasure driven by atomized consumers, who were likened to gas particles stochastically bumping around within an aerosol can. The macro-system (aka the aerosol can) in which the “markets” were located was increasingly shaped by a new technocratic class of “scientific engineers” who would impose closed system determinism onto humanity in a bid to maximize the “perception” of freedom, with none of the actuality of it.

Forecasters in this new surreal wonderland were told that they could extrapolate present trends into the future using probability functions, but they could not think about boundary conditions shaping the invisible (albeit real) constraints shaping those very economies they sought to influence.

In climate science, computer models were imposed onto a field which once took the sun, fluctuating magnetic fields, cosmic radiation, and broader galactic environment into consideration. Instead of thinking about top-down factors like solar wind, magnetic fields, and cosmic radiation determining earth’s climate, the new generation of climate scientists trained by Club of Rome computer models during the 1970s and beyond increasingly found themselves mentally handicapped by the acceptance of dualistic absurdities.

Chief among these absurdities was the assumption that although short-term weather patterns were intrinsically unknowable (beyond statistical probability functions), it was absolutely certain that the globe would heat up into a new furnace within a century.

In the geological sciences, things did not fare much better.

While real scientists were making pioneering discoveries into earthquake science by observing the magnetic and planetary/solar alignments of the solar system through the 1930s-1960s, the false dualism again asserted itself as the new era of computer modeling emerged onto the scene.

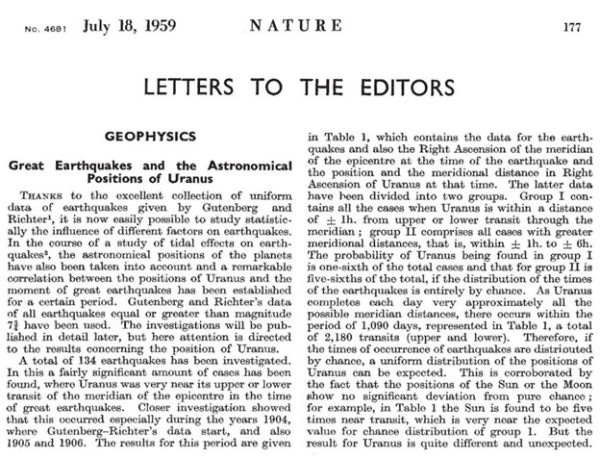

Compare the article from the July 18, 1959 edition of Nature magazine, showcasing the new insights into earthquake forecasting, with the modern gospel of the United States Geological Survey (which sets the standards for all “acceptable educational practices” across the trans-Atlantic world).

Concepts such as those published in the 1959 Nature article became increasingly verboten over the years, to the point that the current US Geological Survey official website answers the question “Can earthquakes be predicted?” as follows:

“No. Neither the USGS nor any other scientists have ever predicted a major earthquake. We do not know how, and we do not expect to know how any time in the foreseeable future. USGS scientists can only calculate the probability that a significant earthquake will occur in a specific area within a certain number of years. An earthquake prediction must define 3 elements: 1) the date and time, 2) the location, and 3) the magnitude.”
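The three elements in this definition can be made concrete by treating a forecast as a time window, a circular region and a magnitude range, and checking an observed event against each element separately. The Python sketch below is an illustration under stated assumptions: the `Forecast` and `Event` fields, the tolerances and the example values are hypothetical, the earth is treated as a sphere (haversine distance), and none of this is a USGS scoring procedure.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0  # mean radius; treats the earth as a sphere


@dataclass
class Forecast:
    """Hypothetical forecast: a time window, a circular region, a magnitude range."""
    start: datetime
    end: datetime
    lat: float
    lon: float
    radius_km: float
    min_mag: float
    max_mag: float


@dataclass
class Event:
    """An observed earthquake: origin time, epicenter, magnitude."""
    time: datetime
    lat: float
    lon: float
    mag: float


def great_circle_km(lat1, lon1, lat2, lon2):
    """Haversine distance in km between two latitude/longitude points in degrees."""
    phi1, phi2 = radians(lat1), radians(lat2)
    dphi, dlmb = phi2 - phi1, radians(lon2 - lon1)
    h = sin(dphi / 2) ** 2 + cos(phi1) * cos(phi2) * sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(h))


def check_elements(fc, ev):
    """Report separately whether the event met the forecast's time, place and size."""
    distance = great_circle_km(fc.lat, fc.lon, ev.lat, ev.lon)
    return {
        "time": fc.start <= ev.time <= fc.end,
        "location": distance <= fc.radius_km,
        "magnitude": fc.min_mag <= ev.mag <= fc.max_mag,
    }


# Invented example values, not a real forecast or event
utc = timezone.utc
fc = Forecast(datetime(2023, 1, 1, tzinfo=utc), datetime(2023, 1, 7, tzinfo=utc),
              lat=0.0, lon=0.0, radius_km=200.0, min_mag=6.0, max_mag=7.0)
ev = Event(datetime(2023, 1, 3, 12, tzinfo=utc), lat=1.0, lon=0.0, mag=7.2)
print(check_elements(fc, ev))
```

Returning one result per element, rather than a single pass/fail, shows exactly which of the three criteria a given forecast missed; in the invented example, time and location are met while the magnitude falls just outside the stated range.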

Under the impossible standards set by the priests at the USGS, which demand mathematically PERFECT results in predicting the exact date and time, exact location, and exact magnitude of an earthquake, any deviation from mathematical perfection between forecast and empirical result, however slight, means that no forecast is deemed to have been made. The irony, of course, is that if scientists like Kepler, Weber, Gauss, or Planck had used the standards promoted by the scientists at the USGS, none of their discoveries could ever have been made.

Those scientists wishing to make actual discoveries in this new field of earthquake forecasting, which would do much to expand humanity’s knowledge of the cosmos and also save countless lives, would be much better rewarded by eating some humble pie, spitting out the “elastic rebound” Kool-Aid and thinking like Kepler, Gauss, Planck and Frank Hoogerbeets.

Footnote

[1] Kepler always maintained that magnetism was the form that this species of attraction and motion took, saying: “Therefore, as the sun forever turns itself, the motive force or the outflowing of the species from the sun’s magnetic fibres, diffused through all the distances of the planets, also rotates in an orb and does so in the same time as the sun, just as when a magnet is moved about, the magnetic power is also moved, and the iron along with it, following the magnetic force.”

The author developed some of these concepts in a recent episode of the Great Game.

A lot of people have been writing to me about HAARP as an earthquake-creating weapon:

From everything I've studied, which is included in my new essay on earthquake forecasting featured on this channel, all signs point to harmonics of planetary geometries (which include considerations of the configurations of the planets, moons and sun within our system) and the associated electric circuit shaped by magnetic fields that in turn shape the currents and interstellar cosmic radiation that flow through our galaxy and into our earth. These are the dominant factors shaping volcanism, earthquakes, ice ages and weather more broadly. HAARP can certainly influence the ionosphere, which I'm sure can influence weather patterns such as wind currents and rain cycles, but I have seen no solid evidence that the ionosphere is a cause of major quakes. It is one of a handful of precursors several degrees removed from the actual causal nexus, which is driven far more by our solar system and broader galaxy. As an analogy, I mean this in the same way that most people who died with AIDS didn't die because of AIDS, but because of Fauci's drug protocol.
